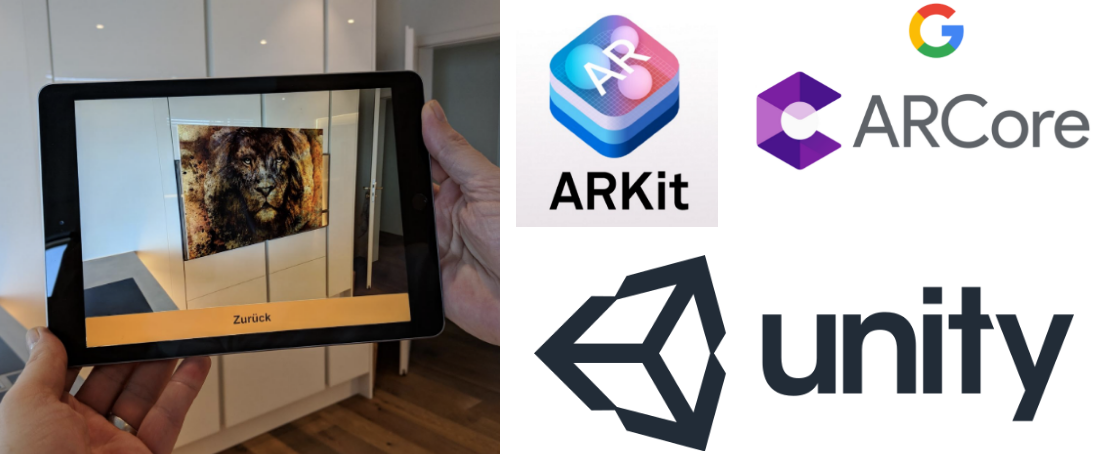

Augmented Reality App to visualize images in the living room

This post is a summary of my final study thesis. The goal of the project was to evaluate the current state of mobile AR and to find out what is possible in terms of visualizing an image on a wall and adjusting the image rendering based on the user's viewing angle.

I developed a proof-of-concept mobile application which allows a user to select an image from an e-commerce store and place it on a wall. The app runs on both Android and iOS. I have released a stripped-down open source version of the app on my GitHub.

Analysis

The aim of the analysis was to gain a more detailed understanding and overview of the current augmented reality technologies. The following questions had to be answered.

- What exactly is Augmented Reality?

- How do current AR technologies work?

- Which devices support Augmented Reality?

- What AR frameworks are available?

Augmented Reality

Augmented Reality (AR) is the extension of the real world with virtual objects and information. Virtual objects can be projected as 3D models directly on top of physical objects in real time.

AR is displayed in the user's field of vision. In order to provide the most realistic experience possible, the projection must be recalculated and displayed whenever there is a change in the field of view, such as a change of the viewing angle or moving closer to an object.

Difference from Virtual Reality

In Virtual Reality (VR) a complete virtual environment is created. This leads to a significant difference between AR and VR. In VR the environment is modeled beforehand, so the full environment is already known when the application is developed. In AR the environment is not known at development time, so it must be analyzed at runtime before any interaction is possible. This analysis can be approached in many different ways.

Kinds of Augmented Reality

Marker-based AR

Marker-based AR uses a specific visual object, the marker, that is placed in the user's environment. The dimensions and content of the marker are already known during the development of the application. If the marker is recognized in the environment, it can function as a reference point for the placement of further virtual objects in the user's field of vision. A marker can have any shape, such as a printed QR code or a $1 bill.

Markerless AR

As the name implies, markerless AR does not require any markers. To enable markerless AR, the environment must be analyzed as precisely as possible with the help of the camera and other sensors. Specific feature points in the environment are detected and act as reference points for virtual objects. Markerless AR is made possible by the combined use of the camera and sensors such as the compass, gyroscope and accelerometer. Depth sensors enable even more efficient detection of the environment.

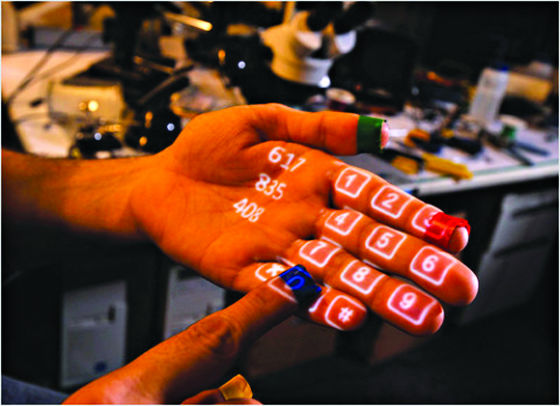

Projection based AR

Another type of AR is the direct projection of light onto the surface of the real world. Projection based AR allows not only the enhancement of one user's field of vision but can also be perceived by several users simultaneously. Interactions are made possible by the use of sensors that can detect the interruption of the projected light. The technology is still in an experimental state. Advanced versions of Projection based AR technologies even allow objects to be projected directly in the air without the need for a surface. Star Wars holograms are a well-known fictional example of this technology, which could become reality in the future.

Augmented Reality Technologies

AR can be achieved by many different technologies. Most often it is a combination of different technologies that power the current AR applications.

Computer Vision

Computer vision (CV) algorithms are a critical component of AR. They enable the recognition of objects in the user's environment in near real-time. Algorithms that recognize markers from different perspectives enable marker-based AR applications. Computer vision is also used to recognize surfaces and feature points for markerless AR. These algorithms use advanced machine learning models to recognize the environment efficiently. The ability to recognize and model the world around us using only computer vision is likely to keep improving.

IMU

An inertial measurement unit (IMU) is a combination of sensors that are used to localize the user in the environment. IMUs are currently embedded in almost all mobile devices.

An IMU contains the following sensors:

- Accelerometer (acceleration sensor)

- Gyroscope (rotation sensor)

- Magnetometer (magnetic field sensor)

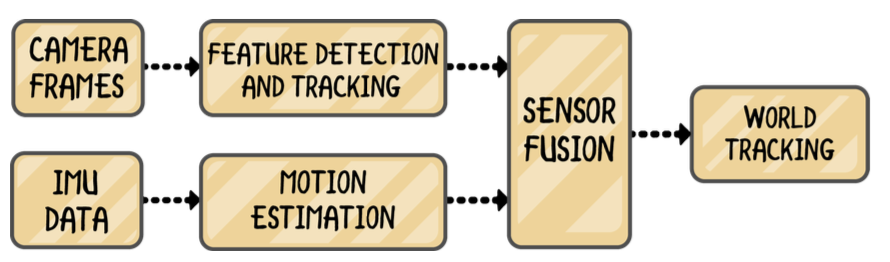

SLAM

Simultaneous localization and mapping (SLAM) is a technology that uses a combination of camera and IMU sensor data to capture and update the environment and the user's current position in that environment in near real-time.

Camera data is analyzed with computer vision algorithms to detect feature points and their motion. At the same time, the IMU data is analyzed and synchronized with the analysis of the camera data. Each method is very error-prone on its own, but combined they can produce a fairly accurate model of the environment and the user's position in that environment. This process is called sensor fusion.
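The idea behind sensor fusion can be illustrated with a complementary filter, one of the simplest fusion techniques. This is only a one-dimensional sketch and not how ARKit or ARCore are implemented internally; the class name `ComplementaryFilter`, the parameter names and the default weight `alpha = 0.98` are assumptions made for illustration. It blends a fast but drifting estimate (the integrated gyroscope rate) with a slower, drift-free absolute measurement (for example an angle derived from camera feature points).

```csharp
using System;

// Hypothetical 1D illustration of sensor fusion (complementary filter).
// Not taken from ARKit/ARCore; all names and the default weight are assumptions.
public static class ComplementaryFilter
{
    // previousAngle: last fused angle estimate (degrees)
    // gyroRate:      angular velocity reported by the gyroscope (degrees/second)
    // dt:            time since the last update (seconds)
    // absoluteAngle: drift-free but noisy angle, e.g. derived from camera features (degrees)
    // alpha:         weight of the gyro prediction, between 0 and 1
    public static double Fuse(double previousAngle, double gyroRate, double dt,
                              double absoluteAngle, double alpha = 0.98)
    {
        if (alpha < 0.0 || alpha > 1.0)
            throw new ArgumentOutOfRangeException(nameof(alpha));

        // Dead reckoning from the IMU: smooth, but accumulates drift over time.
        double predicted = previousAngle + gyroRate * dt;

        // Pull the prediction slightly toward the absolute measurement to cancel drift.
        return alpha * predicted + (1.0 - alpha) * absoluteAngle;
    }
}
```

Calling `Fuse` once per frame keeps the responsiveness of the gyroscope while the absolute measurement slowly corrects the accumulated error.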

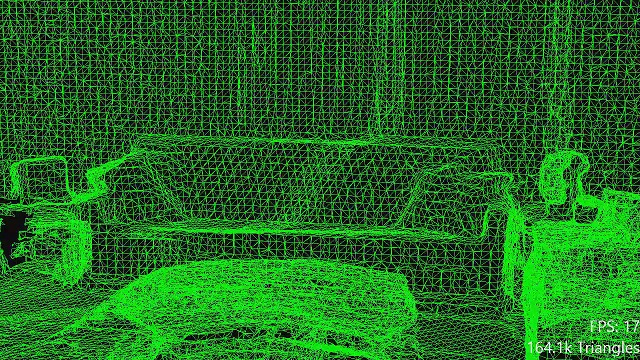

Spatial Mapping

Spatial mapping entails a complete, detailed modeling of the user's environment. For efficient spatial mapping, additional depth sensors that allow perceiving more accurate depth information are required.

Augmented Reality Devices

AR Devices can be divided into different categories. The two main categories are Head Mounted and Handheld Devices. The focus of this thesis was on handheld mobile devices.

Android

High-end Android mobile devices enable markerless AR with the use of the ARCore framework developed by Google.

| Property | Details |
|---|---|
| Sensors | Camera / Accelerometer / Gyroscope |
| Platform | >= Android 7.0 |
| Manufacturer models | Acer Chromebook, Asus ROG Phone, Google Nexus, Google Pixel, HMD Nokia, Samsung Galaxy, Sony Xperia (partial list) |
| Number of devices (ARCore support) | > 100 million (artillry.co, 2018) |

iOS

iPhones running iOS enable markerless AR with the ARKit framework developed by Apple.

| Property | Details |
|---|---|
| Sensors | Camera / Accelerometer / Gyroscope / Front Depth Sensor (iPhone X) |
| Platform | >= iOS 11.0 |
| Manufacturer models | iPhone (SE, 6S, 6S Plus, 7, 7 Plus, 8, X), iPad (2016 Pro, 2017, 2018) |
| Number of devices (ARKit support) | > 300 million |

AR Frameworks

The following frameworks all support Markerless AR on mobile devices.

ARKit

ARKit is the AR framework developed by Apple. ARKit is designed for iOS devices and runs only on iOS. Apple calls ARKit the largest AR platform in the world, as over 300 million devices have supported ARKit since the iOS 11 update in 2017.

| Property | Details |
|---|---|
| Features | Motion/Orientation Tracking, Environmental Understanding, Light Estimation, User Interaction, Horizontal Plane Detection, Vertical Plane Detection, Face Tracking, Image Recognition, Persistent World Maps, World Sharing, Image Tracking, Object Detection |
| Release date | June 2017 |
| Current release | 2.0 (as of Jan 2019) |
| Platform support | >= iOS 11.0 |
| Language support | Objective-C / Swift |
| License | Free |

ARCore

ARCore is the AR framework from Google. It is the successor of a previous project called "Tango". Tango already supported markerless AR, but needed a depth sensor. The ARCore framework no longer requires a depth sensor and allows markerless AR using the camera and IMU sensors, similar to the ARKit framework. The framework is not included in Android, but it is downloaded the first time an ARCore app is started.

| Property | Details |
|---|---|
| Features | Motion/Orientation Tracking, Environmental Understanding, Light Estimation, User Interaction, Horizontal Plane Detection, Vertical Plane Detection, Augmented Images, Cloud Anchors |
| Release date | August 2017 |
| Current release | 1.6.0 (as of Jan 2019) |
| Platform support | >= Android 7.0, Cloud Anchors also support iOS |
| Language support | C / C++ / Java / Kotlin |
| License | Free |

ARFoundation

Unity is a widely known game engine used to develop 3D applications. Augmented reality applications mostly place 3D models as objects in the environment, so Unity is very well suited for the development of AR applications. Unity supports plugins for ARKit and ARCore. However, when these plugins are used directly, one is always developing specifically for one of the two platforms. ARFoundation combines the common functions of ARKit and ARCore behind a standardized interface, which enables cross-platform AR development.

| Property | Details |
|---|---|
| Features | Motion/Orientation Tracking, Environmental Understanding, Light Estimation, User Interaction, Horizontal Plane Detection, Vertical Plane Detection |
| Release date | May 2018 |
| Current release | 1.0.0 (preview), included in Unity 2018.1 |
| Platform support | >= Android 7.0 / >= iOS 11.0 |
| Language support | C# |
| License | Unity license |

Wikitude

The Wikitude SDK contains a framework for the development of professional augmented reality applications. The SDK supports a large number of platforms and is suitable for enterprise use.

| Property | Details |
|---|---|
| Features | Motion/Orientation Tracking, Environmental Understanding, Light Estimation, User Interaction, Horizontal Plane Detection, Vertical Plane Detection, Save & Load Instant Targets, SMART support (ARCore / ARKit), Object & Scene Recognition, Object & Scene Tracking, Image Recognition, Image Tracking, Long Distance Recognition, Multiple Image Targets, Distance to Image Target |
| Release date | First version in October 2008; January 2017 with new SLAM engine |
| Current release | 8.2 (as of Jan 2019) |
| Platform support | iOS, Android, Windows, Smart Glasses, Web |
| Language support | C / C++ / Java / C# / JavaScript |
| License | SDK Pro 2490€ / year |

6D.AI

Another promising AR framework is developed by 6D.AI. The framework seems to support more advanced occlusion and cross platform persistence.

At the time of writing the framework is in private beta and device support is still limited.

Conception

During the analysis, I played with the Unity Engine and learned the basic concepts. Unity is already widely used for game and 3D applications, making it a good starting point for developing AR applications.

I decided to build the app with the ARFoundation Unity framework. This allowed me to have a single code base with cross-platform support for iOS and Android.

Libraries

The app makes use of the following libraries:

Unity ARCore XR

The ARCore XR Unity plugin is used underneath by the ARFoundation framework for AR code running on the Android ARCore platform.

Unity ARKit XR

The ARKit XR Unity plugin is used underneath by the ARFoundation framework for AR code running on the iOS ARKit platform.

Unity AR Foundation

The AR Foundation Unity plugin provides a common interface for the development of AR applications with support for iOS and Android. Depending on the Unity Editor platform configuration, ARCore or ARKit is used. The entire application code is implemented using the ARFoundation interfaces.

Lean Touch

The Lean Touch Library can be downloaded through the Unity Asset Store and the basic version is free. The Library is used to implement touch gestures such as zoom, rotate and other touch interactions.

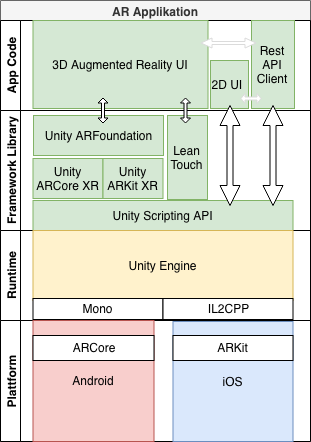

Architecture

The image shows the entire application architecture with the various components and their position in the hierarchy from app code to the executing platform.

The color green represents C# Code. The complete application code is developed in C# to be platform independent.

The application code is divided into the following 3 modules:

- 3D Augmented Reality UI: Code used to visualize the image in the user's environment.

- 2D UI: Code for selecting an image. The 2D UI is also implemented directly with Unity.

- REST API Client: Code for accessing a shop REST API to search for images. Used by both UI modules.

Implementation

To keep this post a summary, I have only included the implementation of the core part of the app in this section.

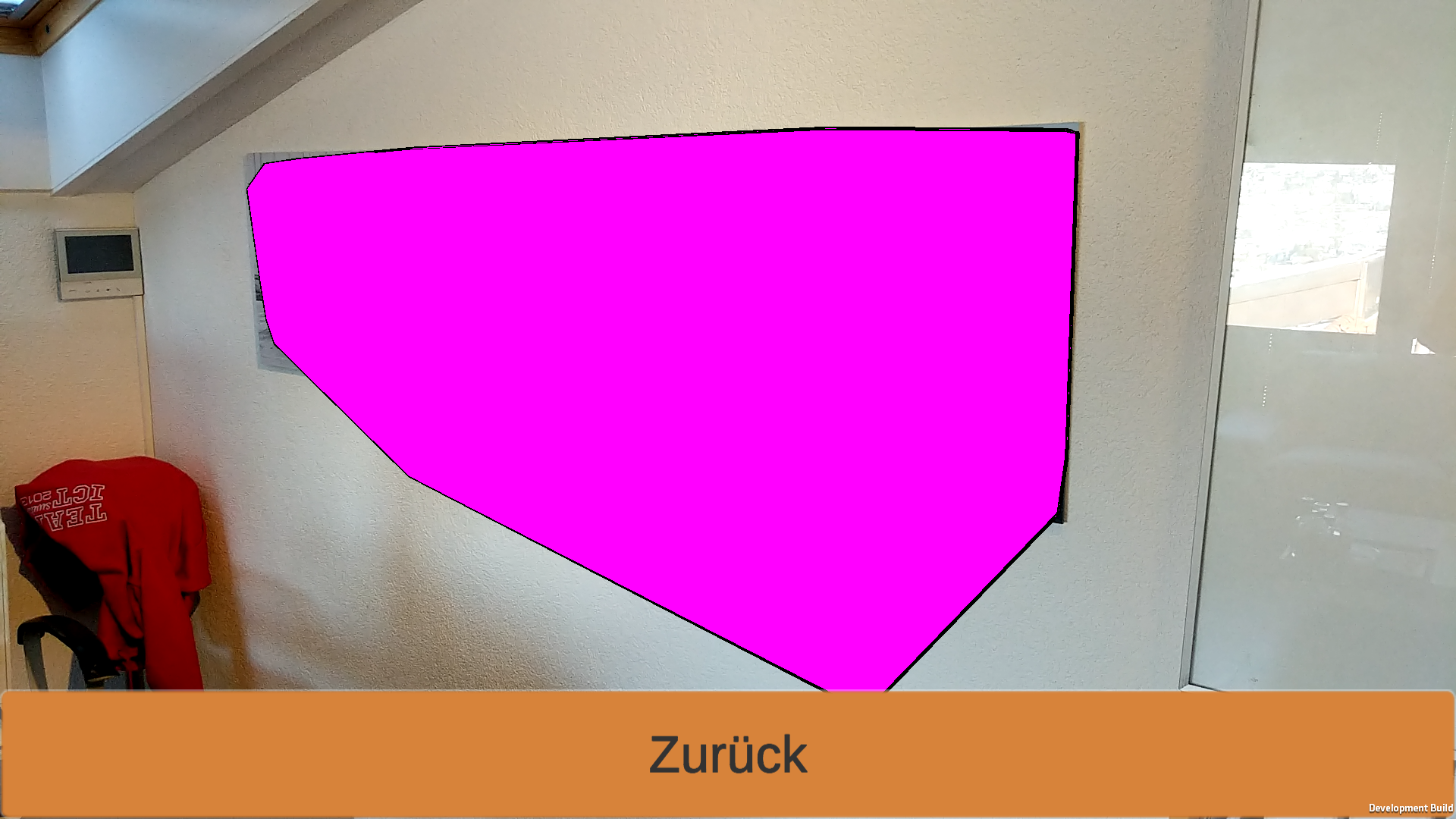

Wall detection

For the detection of walls, the ARPlaneManager component from ARFoundation is used. As soon as a surface is detected, a PlaneAdded event is triggered and the plane is visualized in the scene with a preconfigured Prefab asset. In debug mode, the entire surface is colored to better visualize the progress of the detection process. In the image you can see that the detected planes do not automatically extend along the whole wall, because the detection techniques of the ARKit/ARCore frameworks do not yet support detailed room mapping. Each plane consists of a polygon that is continuously updated and extended as more reference points are found that can be used to expand the polygon surface.

The detection process works best if the environment has many feature points for the computer vision algorithms to use. The AR frameworks then create an approximate 3D model including plane positioning using only the 2D Camera Feed and the gyroscope and accelerometer sensors.

All the detected planes have a Unity transform object. This object contains information such as position and rotation within the scene. In addition, the alignment (horizontal/vertical) of the plane is defined with a property. For this app, only vertical planes (walls) are visualized.
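In the app the alignment comes directly from the framework, but it can help to see how such a classification follows from a plane's orientation. The following sketch is an assumption-based illustration, not ARFoundation code: `PlaneKind`, `PlaneClassifier` and the 10 degree tolerance are hypothetical, and it uses `System.Numerics` instead of Unity types so that it runs outside the engine. As in Unity, the Y axis is treated as "up".

```csharp
using System;
using System.Numerics;

// Hypothetical helper, not part of ARFoundation: classifies a plane by its normal vector.
public enum PlaneKind { Horizontal, Vertical, Other }

public static class PlaneClassifier
{
    // normal:           the plane's normal in world space (Y is up, as in Unity)
    // toleranceDegrees: assumed tolerance for calling a plane horizontal or vertical
    public static PlaneKind Classify(Vector3 normal, float toleranceDegrees = 10f)
    {
        // A zero-length normal carries no orientation information.
        if (normal.LengthSquared() == 0f) return PlaneKind.Other;

        Vector3 n = Vector3.Normalize(normal);
        float tolerance = toleranceDegrees * MathF.PI / 180f;

        // |cos| of the angle between the normal and the up axis.
        float upDot = Math.Abs(Vector3.Dot(n, Vector3.UnitY));

        // Floors/ceilings: the normal points (almost) straight up or down.
        if (upDot >= MathF.Cos(tolerance)) return PlaneKind.Horizontal;

        // Walls: the normal is (almost) perpendicular to the up axis.
        if (upDot <= MathF.Sin(tolerance)) return PlaneKind.Vertical;

        return PlaneKind.Other;
    }
}
```

With a filter like this, only planes classified as `PlaneKind.Vertical` would be kept as wall candidates.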

Wall placement

In order to successfully position an image in the user's environment, the following information from the recognized wall is required:

- Distance to the viewer (defined by position in the scene)

- The orientation of the wall from the observer's point of view (defined by rotation in the scene)

To move an image to a specific location on the wall, the center of the device screen is used as the alignment point. This enables intuitive handling by changing the position of the image by moving the device.

To check if a user is pointing at a detected wall, a Raycast is sent on every update frame to check if we hit a polygon plane.

```csharp
// Calculate the screen center (recalculated on each frame to support landscape/portrait changes).
var screenCenter = new Vector2(Screen.width / 2, Screen.height / 2);

// Check if we point at the inside of a plane's polygon.
if (ARSessionOrigin.Raycast(screenCenter, raycastHits, TrackableType.PlaneWithinPolygon))
{
    // Place the image on the first plane hit.
    PlaceOnHit(raycastHits[0]);

    // Activate the image object if it is not active already.
    if (!imageInRoom.activeSelf)
    {
        imageInRoom.SetActive(true);
    }
}
```

The full code including the PlaceOnHit function can be found in the GitHub project.

As mentioned in the section "Wall detection", the complete room shape is never fully recognized. With the code shown above, an image can only be positioned on a plane when the Raycast hits the inside of that plane's polygon.

This limited the range of application when only small planes were detected. After some research I found a way to use planes with an unlimited area as a Raycast target. This extends the detected plane at its current position in all directions, while keeping its rotation.

With the following additional logic, after an initial positioning on the limited polygon area, the system now switches to positioning with the target set to the unlimited area of the plane.

```csharp
// Try a raycast against the plane with infinite bounds
// (this allows building an endless wall from the world position of the plane).
if (ARSessionOrigin.Raycast(screenCenter, raycastHits, TrackableType.PlaneWithinInfinity))
{
    AddDebugLine("plane TrackableType", "WithinInfinity");

    // Find the hit that belongs to the plane the image was placed on, via its id.
    for (int i = 0; i < raycastHits.Count; i++)
    {
        var hit = raycastHits[i];
        if (hit.trackableId == placedPlaneId)
        {
            // Place on the matching hit (not simply on the first hit in the list).
            PlaceOnHit(hit);
            break;
        }
    }
}
```

Now only a small part of a wall has to be detected in order to place an image anywhere on the wall.
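What a raycast against an unbounded plane computes can be sketched with basic vector math. This is an illustration of the underlying geometry, not the ARFoundation implementation; the class `InfinitePlaneRaycast` and its parameters are hypothetical, and `System.Numerics` is used instead of Unity types so the snippet runs on its own. A plane is described by any point on it plus its normal, so the polygon boundary plays no role in the result.

```csharp
using System;
using System.Numerics;

// Hypothetical geometry sketch: intersects a ray with an unbounded plane.
public static class InfinitePlaneRaycast
{
    // Returns true and the hit point if the ray hits the plane in front of its origin.
    public static bool TryIntersect(Vector3 rayOrigin, Vector3 rayDirection,
                                    Vector3 planePoint, Vector3 planeNormal,
                                    out Vector3 hitPoint)
    {
        hitPoint = default;

        // If the ray runs parallel to the plane there is no single intersection point.
        float denominator = Vector3.Dot(planeNormal, rayDirection);
        if (Math.Abs(denominator) < 1e-6f) return false;

        // Distance along the ray at which it crosses the plane.
        float t = Vector3.Dot(planeNormal, planePoint - rayOrigin) / denominator;

        // A negative distance means the plane lies behind the camera.
        if (t < 0f) return false;

        hitPoint = rayOrigin + t * rayDirection;
        return true;
    }
}
```

Because only a point and a normal are needed, a small detected patch of wall is enough to compute hit positions anywhere along that wall.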

Result

The result of this thesis is a proof-of-concept cross-platform mobile app that allows a customer of an online store to search for and choose an image and place it on any detected wall in their own room.

The main features of the app are listed below.

Image Selection

To search for images to place on a wall the REST API of a wall decor e-commerce system is used.

Vertical Plane Detection

After an image is selected, the AR Scene opens and starts searching for vertical planes. Once a plane is found, the image gets automatically placed on the plane. The center of the screen is used to control the position on the plane.

Touch

The position of the placed image can be modified using touch gestures.

Fixed Image

Once satisfied with the current position, a tap on the image fixes it in place.

Light estimation

To achieve a more realistic visualisation, the rendering of the image object is dynamically adjusted using light estimation data provided by the AR frameworks to match the brightness of the environment.
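One simple way to apply such an estimate is to scale the image color by the estimated brightness. The sketch below is an assumption, not the app's actual rendering code: the `Rgb` struct, the `LightAdjust` class, the normalized 0 to 1 brightness range and the lower bound `minFactor` are all hypothetical and chosen only for illustration.

```csharp
using System;

// Hypothetical color type so the sketch runs without Unity.
public readonly struct Rgb
{
    public readonly float R, G, B;
    public Rgb(float r, float g, float b) { R = r; G = g; B = b; }
}

public static class LightAdjust
{
    // brightness: assumed normalized estimate, 0 (dark) to 1 (bright);
    //             null when the framework has no estimate for the current frame
    // minFactor:  assumed lower bound so the image never turns completely black
    public static Rgb Apply(Rgb baseColor, float? brightness, float minFactor = 0.2f)
    {
        // Without an estimate, render the image unchanged.
        if (brightness == null) return baseColor;

        // Keep the factor inside [minFactor, 1] and darken all channels equally.
        float factor = Math.Clamp(brightness.Value, minFactor, 1f);
        return new Rgb(baseColor.R * factor, baseColor.G * factor, baseColor.B * factor);
    }
}
```

Applying the factor on every frame lets the virtual image darken and brighten together with the room.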

Image Format dimensions

The customer can choose an image format via a dropdown that is placed in AR underneath the image. The dimensions match real-world centimeters. The implementation of this feature was not as complicated as I had thought: the Unity coordinate system is already defined in meters, and the AR frameworks provide the mapping of the real world to the Unity coordinate system. To calculate the right scale factor for each format, I had to explicitly define pixels-per-unit constants when creating a sprite for the image. The accuracy of the dimensions compared to the real world is quite good, with an error of about 10%.
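The arithmetic behind such a constant is short. The sketch below assumes that one Unity unit equals one meter and that a sprite's width in world units is its pixel width divided by its pixels-per-unit value; the `SpriteScale` class and its method names are hypothetical and not taken from the app.

```csharp
using System;

// Hypothetical helper: derives a pixels-per-unit value so that an image
// appears with a given real-world width (1 unit is assumed to be 1 meter).
public static class SpriteScale
{
    public static float PixelsPerUnit(int imageWidthPixels, float targetWidthCm)
    {
        if (imageWidthPixels <= 0) throw new ArgumentOutOfRangeException(nameof(imageWidthPixels));
        if (targetWidthCm <= 0f) throw new ArgumentOutOfRangeException(nameof(targetWidthCm));

        float targetWidthMeters = targetWidthCm / 100f;
        return imageWidthPixels / targetWidthMeters;
    }

    // Inverse check: the world-space width a sprite ends up with.
    public static float WorldWidthMeters(int imageWidthPixels, float pixelsPerUnit)
    {
        return imageWidthPixels / pixelsPerUnit;
    }
}
```

For example, a 1200 pixel wide image that should appear 60 cm wide needs roughly 2000 pixels per unit, which gives it a width of about 0.6 units in the scene.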

Conclusion

After many hours of experimenting and researching I came to the following conclusions.

State of mobile AR frameworks

The augmented reality frameworks for mobile devices have made enormous progress in the last two years (2017-2019). Before that, recognizing surfaces without the help of markers was a very complex task that could not easily be implemented without in-depth computer vision and sensor knowledge.

The two most popular frameworks, ARKit (Apple) and ARCore (Google), allow the analysis of the user's environment using only a camera feed and IMU (accelerometer/gyroscope) sensors, which are available in the majority of current mobile devices. These frameworks give a wide range of developers the ability to take advantage of these features and build applications on top of them.

The current mobile AR frameworks all have proprietary interfaces and are platform-specific. For this reason, there is a great demand for a high-level interface for the development of platform-independent augmented reality applications. This is exactly what ARFoundation, the framework used in this thesis, is trying to provide. It offers a further abstraction layer over the platform-specific APIs.

The development environments for Augmented Reality are not yet mature and still have a lot of room for improvement that would save developers valuable time. During this project I had to test all AR functions directly on the devices. Remote debugging is already supported, but it isn't really practical with a game render loop, because once a breakpoint is hit the whole application is halted. Long build times combined with many dev/test iterations lengthen the development process enormously. In the near future, iterative development should be possible directly in the development environment by using a previously scanned environment.

Accuracy of wall recognition

The recognition of a surface is the essential component for the successful visualization of an image in the user's environment. If no surface is detected, visualization is not possible. The recognition functions of the frameworks depend strongly on the feature points available in the environment. With the current state of surface detection algorithms it is almost impossible to place an image on a completely white wall without any feature points.

Benefits for customers

The goal of the mobile app developed during this thesis was to help customers make a purchase decision by letting them visualize the images in their own living room. Successful wall detection is crucial for leaving a positive impression on the user. It can be assumed that in most cases a customer wants to visualize an image on a wall that is still empty, so the probability is high that the app will be pointed at a completely white wall. The current limitation that completely white walls cannot yet be detected can therefore significantly undermine the app's goal of making the purchase decision easier.

Outlook

Unity, the company behind the well-known game engine, plans to release a development environment specifically for AR this year (2019). This development environment, abbreviated MARS (Mixed and Augmented Reality Studio), should simplify the development of AR applications significantly and improve the iterative process by enabling simulations within the IDE. I am optimistic that better tools and technologies will help to reduce the development effort, so that in the near future a better AR application can be created with fewer resources.

With further iterations of AR frameworks and AR-specific hardware such as depth sensors, the detection of walls will become considerably more reliable and accurate. This suggests that in the near future such applications for indoor decor will be able to consistently provide a positive experience and a great benefit in the majority of use cases.

Once the Apple iPhone includes a rear-facing depth sensor, like the one the iPhone X already has on the front for Face ID, other phone manufacturers will likely match the hardware, and AR apps can benefit hugely from those sensors. One can argue that computer vision alone can already build 3D models of the environment, which is true, but such approaches require more feature points and processing power and are not as accurate as a model built with depth sensor data.

In my opinion AR will see rapid and widespread adoption as soon as practical AR glasses are available in the consumer space. In combination with an AR Cloud / Mirrorworld, the potential new use cases are endless.

Closing

If you have any questions I am happy to answer them, just leave a comment below.

Otherwise I would love to hear about your experiences with AR and your predictions for the future of Augmented Reality.

Thanks for reading.